There’s this old organizer wisdom that freedom is an endless meeting. How awful. Here the sprightly technologist steps in to ask:

“Does it have to be? Can we automate all that structure building and make it maintain itself? All the decision making, agenda building, resource allocating, note taking, emailing, and even trust?

We can; we must.”

That’s the popular hypothesis, that technology should fix democracy by reducing friction and making it more efficient. You can find it under the hood of most web technologies with social ideals, whether young or old. The people in this camp don’t dispute the need for structure and process, but they’re quick to call it bureaucracy when it doesn’t move at the pace of life, and they’re quick to start programming when they notice it sucking up their own free time. Ideal governance is “the machine that runs itself”, making only light and intermittent demands for citizen input.

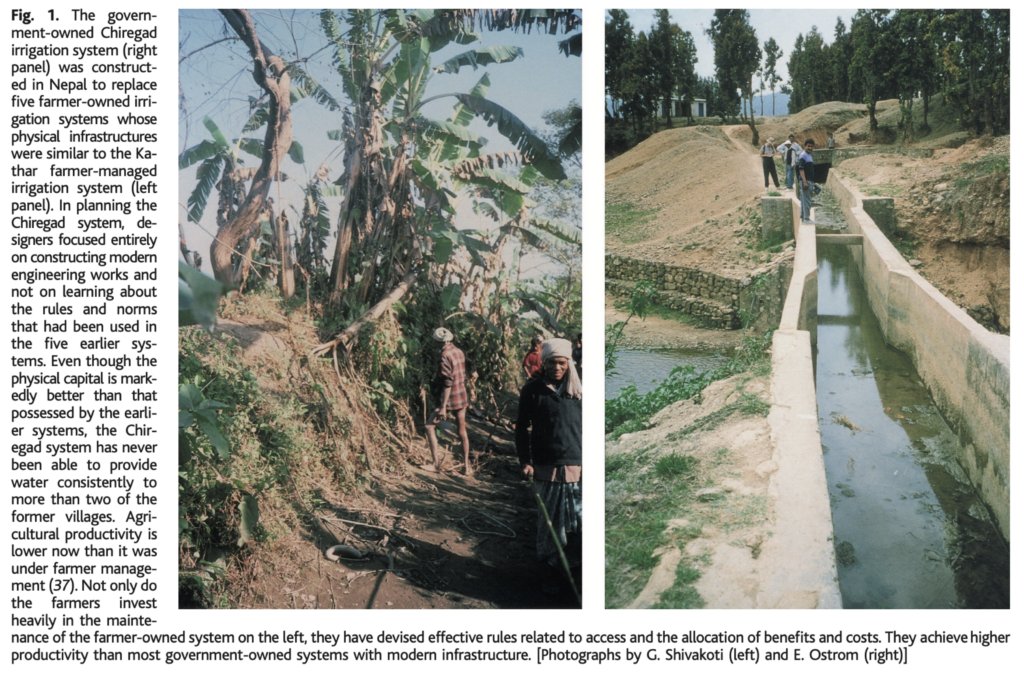

And against it is the unpopular hypothesis. What if part of the effectiveness of a governance system is in the tedious work of keeping it going? What if that work builds familiarity, belonging, bonding, sense of agency, and organizing skills? Then the work of keeping the system up is itself the training in human systems that every member needs to have for a community to become healthy. It instills in every member pragmatic views of collective action and how to get things done in a group. Elinor Ostrom and Ganesh Shivakoti give a case of this among Nepali farmers: when state funds replaced hard-to-maintain dirt irrigation canals with robust concrete ones, farmer communities stopped sharing water equitably. What looked like maintaining ditches was actually maintaining an obligation to each other.

That’s important because under the unpopular hypothesis, the effectiveness of a governance system depends less on its structure and process (which can be virtually anything and still be effective) and more on what’s in the head of each participant. If they’re trained, aligned, motivated, and experienced, any system can work. This is a part of Ostrom’s “institutional diversity”. The effective institution focuses on the members rather than the processes by making demands of everyone, or “creating experiences.”

Why are organizations bad computers? Because computing isn’t their only goal.

In tech circles I see a lot of computing metaphors for organizations and institutions. Looking closer at that helps pinpoint the crux of the difference between the popular and unpopular hypotheses. In a computer or a program, many gates or functions are linked into a flow that processes inputs into outputs. In this framework, a good institution is like a good program, efficiently and reliably computing outputs. Under the metaphor all real-world organizations look bad. In a real program, a function will compute reliably, quickly, and accurately without having to provide permission or buy-in or interest. In an organization each function needs all those things.

So organizations are awful computers. But that’s not a problem, because an organization’s goal isn’t to compute, but to compute things that all the functions want computed. It’s a computer that exists by and for its parts. The tedium of getting buy-in from all the functions isn’t an impediment to proper functioning; it is proper functioning. The properly functioning organization-computer is constantly doing the costly hygiene of ensuring the alignment of all its parts, and if it starts computing an output wrong, it’s not a problem with the computer, it’s a problem with the output.

If the unpopular hypothesis is right, then we shouldn’t focus on processes and structures—those might not matter at all—but on training people, keeping them aligned with each other, and keeping the organization aligned with them. It supports another hypothesis I’ve been exploring, that all governance is onboarding.

Less Product, more HR?

This opens a completely different way of thinking about governance. Through this lens:

- Part of the work of governance is agreeing what to internalize.

- A rule is the name of the thing that everyone agrees that everyone should internalize.

- The other part of governing is creating a process that helps members internalize (whether via training, conversation, negotiation, even a live-action tabletop role playing simulation).

- Once it’s internalized by everyone, the rule is irrelevant and can be replaced by the next rule to work on.

In this system, the constraints on the governance system depend on human limits. You need rules because an org needs to be intentional about what everyone internalizes. You’ll keep needing rules because the world is changing and the people are changing and so what to internalize is going to change. You can’t have too many rules at one time because people can’t remember too many rules-in-progress at once. You need everyone doing and deciding the work together because it’s important that the system’s failures feel like failures of us rather than them.

With all this, it could be tempting to call the popular hypothesis the tech-friendly one. But there’s still a role for technology in governance systems following the unpopular hypothesis. It’s just a change in focus, toward technologies that support habit building, skill learning, training, and onboarding, and that monitor the health of the shared agreements underlying all of these things. It encourages engineers and designers to move from the easy problems of system and structure to the hard ones of culture, values, and internalization. The role of technology in supporting self-governance can still be to make it more efficient, but with a tweak: not more efficient at arranging parts into computations, but more efficient at maintaining its value to those parts.

Maybe freedom is an endless meeting and technology can make that palatable and sustainable. Or maybe the work of saving democracy isn’t in the R&D department, but in HR.